HOW DO WE LABEL DATA?

How do we label data?

Annotation Process: humans label data

- Expensive: costs money and time

- Error prone:

  - The task is repetitive

  - Sometimes labeling can be very hard

- Labels must be validated

BIAS

– We need a lot of data

– We also need to have a good balance between

- Simulate less frequent cases:

  - Weather

  - Illumination

  - Shape

  - Pose

  - etc.

Copyright

Privacy

- Data might be related to people

- Might have enormous consequences

- Regulations

Creating large scale databases is hard

Technical

- Need to annotate data

- Must have sufficient examples for each class

- Must guarantee class balance

Ethical

- Copyright issues

- Privacy concerns
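The class-balance requirement listed under Technical can be checked before training. The following is a minimal sketch, assuming the labels are available as a plain list; the class names and counts are hypothetical and only illustrate the check.

```python
from collections import Counter

def class_balance(labels):
    """Return per-class counts and the ratio between the largest and smallest class."""
    counts = Counter(labels)
    imbalance = max(counts.values()) / min(counts.values())
    return dict(counts), imbalance

# Hypothetical label list for a small detection dataset.
labels = ["car"] * 900 + ["pedestrian"] * 90 + ["cyclist"] * 10
counts, imbalance = class_balance(labels)
print(counts)
print(f"largest/smallest class ratio: {imbalance:.0f}x")
```

A large ratio suggests that the rare classes need more examples, which is one of the places where synthetic data can help.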

Synthetic Data Matters!

Before we dive in...

Some approaches to the data problem

– Unsupervised / Self-supervised learning

– Data augmentation

– Synthetic datasets

Data augmentation

Real data transformations that don't change the target label

- Flip, rotate, resize, scale...

There are studies on generative methods for data augmentation

- Could it make the data distribution larger?
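The label-preserving transformations mentioned above (flip, rotate) can be sketched in a few lines. This example assumes a classification setting and represents an image as a list of pixel rows so that it runs without dependencies; in practice an image library would normally be used, and the function names here are illustrative.

```python
import random

def hflip(image):
    """Mirror the image left to right (each row reversed)."""
    return [row[::-1] for row in image]

def rot90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def augment(image, label, rng):
    """Apply a random flip and a random number of quarter turns.

    The label is returned unchanged: that is the defining property
    of this kind of augmentation.
    """
    if rng.random() < 0.5:
        image = hflip(image)
    for _ in range(rng.randrange(4)):
        image = rot90(image)
    return image, label

image = [[1, 2, 3],
         [4, 5, 6]]
augmented, label = augment(image, "cat", random.Random(0))
print(augmented, label)
```

Whether a transformation really preserves the label depends on the task: a flip is harmless for most object categories but not for text or for classes defined by orientation.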

Synthetic datasets

Synthetic data advantages

- Automatic annotation

- Control over the number of examples and class balancing

- Doesn't need to be related to real people (privacy)

- Copyright

- Data democratization

AI Graphics

- Bridge between computer vision and computer graphics

- ShapeNet: synthetic, but built with human annotations

Beforehand Questions

- How much realism do we need?

- Do we still need real data?

- How much effort to make it work well on real scenarios?

- How about the effort to generate synthetic data?

- Who is using it?

BMW Factory Digital Twin

Who is using it?

The Reality Gap

- Discrepancy between reality and synthetic data

- It's hard to reproduce the richness of the real world

- Subtle details, such as the noise present in real data, can also be a barrier

How much does it impact computer vision tasks?

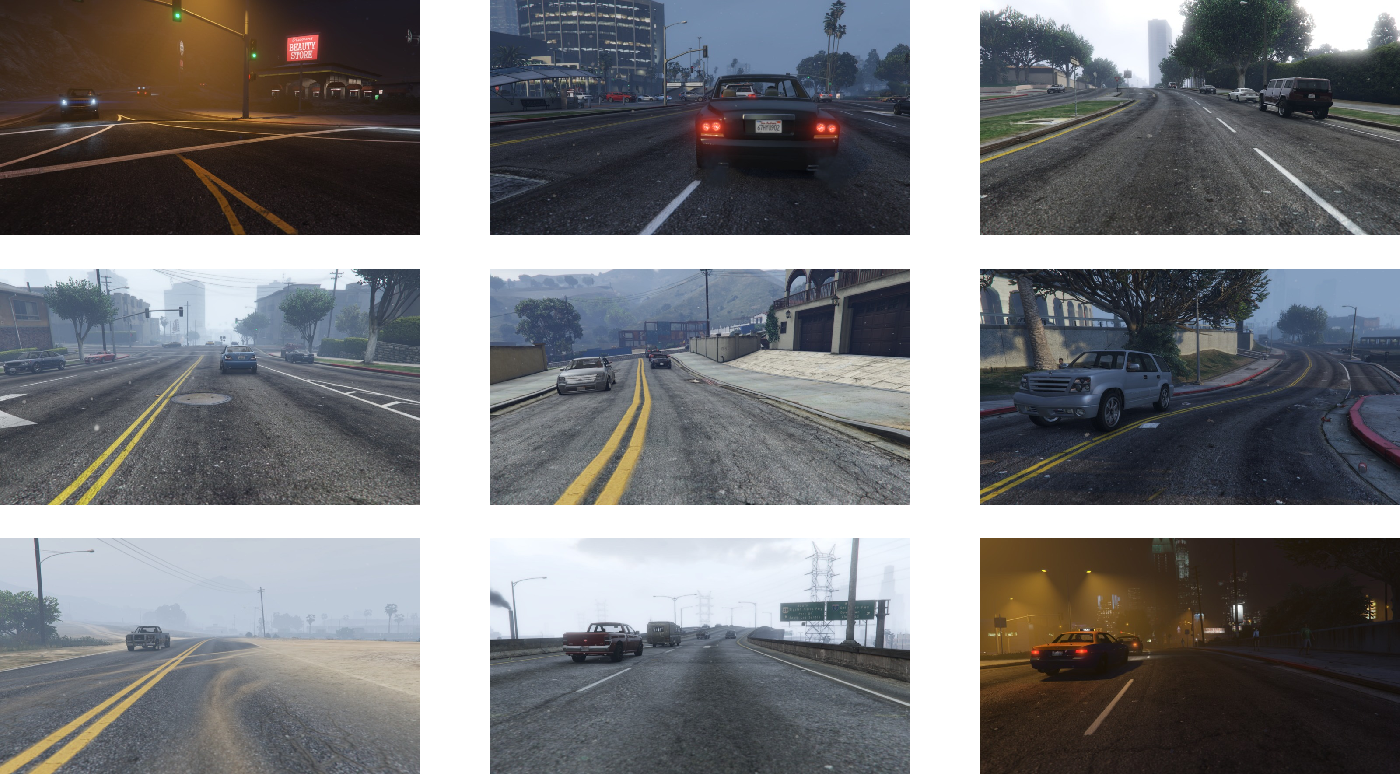

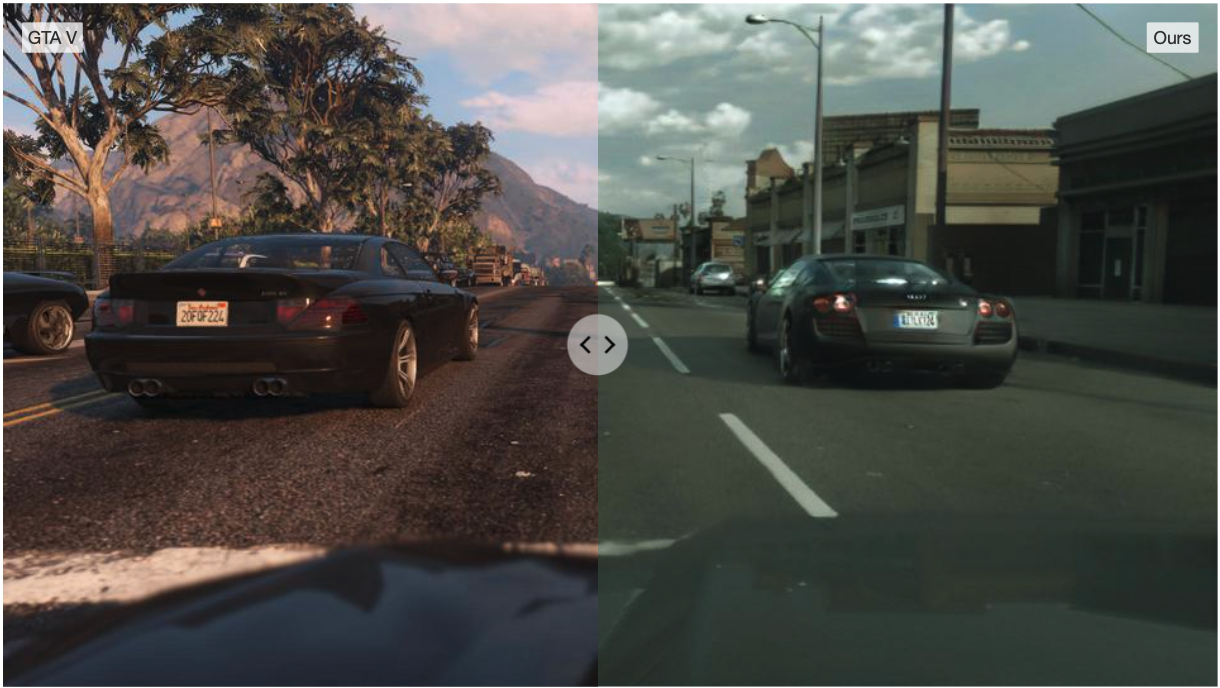

Driving in the matrix: Can virtual worlds replace human-generated annotations for real world tasks?

How much realism do we need?

- Gathered highly realistic data from GTA V

- Trained a Faster R-CNN model for object detection on 10K, 20K and 50K synthetic images

- Evaluated performance on KITTI against a model trained on Cityscapes

- Achieved high performance on real-world data while training only on synthetic examples

Photorealistic data

- The number of synthetic images is significantly higher

- Lighting, color and texture variation in the real world seems greater

- Performance jumped significantly from 10K to 50K examples

How much realism do we need?

"Unlike these approaches, ours allows the use of low-quality renderers optimized for speed and not carefully matched to real-world textures, lighting, and scene configurations."

Domain Randomization

Idea

Give the synthetic data distribution enough simulated variability that the model generalizes to real-world data.

– Try to make reality a subset of the model's "knowledge"

Scene level Domain Randomization

- Number of objects

- Relative and absolute positions

- Number and shape of distractor objects

- Contents of the scene background

- Textures of all objects participating in the scene

- And so on...
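The scene-level parameters listed above can be sampled independently for every training image. The sketch below only produces a scene description that a renderer would consume; the class names, parameter ranges, and the idea of identifying textures and backgrounds by integer id are all assumptions made for illustration, not taken from any of the cited papers.

```python
import random
from dataclasses import dataclass, field

@dataclass
class ObjectPlacement:
    x: float          # normalized horizontal position in [0, 1]
    y: float          # normalized vertical position in [0, 1]
    texture_id: int   # index into a hypothetical texture bank

@dataclass
class SceneConfig:
    background_id: int
    objects: list = field(default_factory=list)
    distractors: list = field(default_factory=list)

def sample_scene(rng, max_objects=5, max_distractors=10,
                 n_textures=100, n_backgrounds=50):
    """Draw one randomized scene description."""
    def place():
        return ObjectPlacement(rng.uniform(0, 1), rng.uniform(0, 1),
                               rng.randrange(n_textures))
    return SceneConfig(
        background_id=rng.randrange(n_backgrounds),
        objects=[place() for _ in range(rng.randint(1, max_objects))],
        distractors=[place() for _ in range(rng.randint(0, max_distractors))],
    )

rng = random.Random(42)
scenes = [sample_scene(rng) for _ in range(1000)]
print(scenes[0])
```

Because every annotation (object count, positions) is known at sampling time, labels come for free once the scene is rendered.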

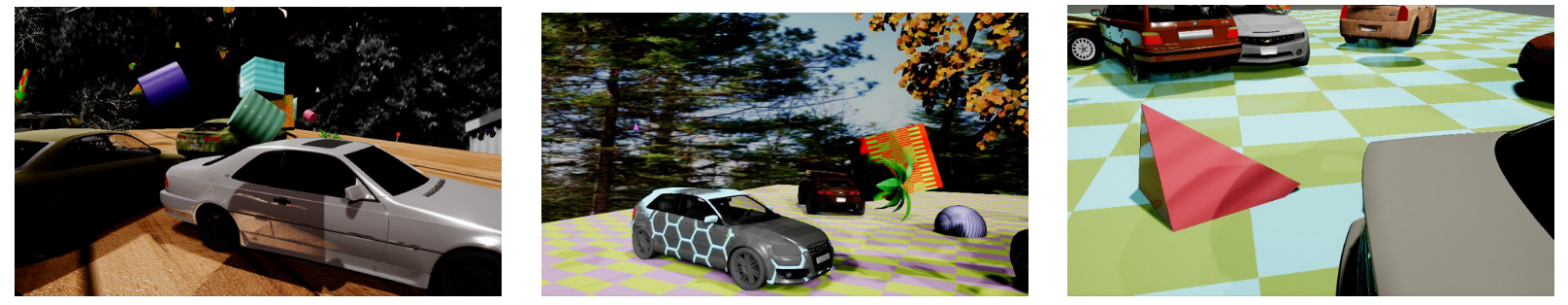

Distractors

- Contextual distractors: objects similar to possible real scene elements, positioned randomly but coherently

- Flying distractors: geometric shapes with random texture, size, and position

Rendering Level Domain Randomization

- Lighting condition

- Rendering quality

- Rendering type (ray tracing, rasterization ...)

- Noise

- And so on...
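Some rendering-level variation, such as lighting and noise, can be approximated in image space. The sketch below applies a random brightness gain and additive Gaussian noise to an 8-bit grayscale image stored as a list of rows. It is a stand-in under stated assumptions: the ranges are arbitrary, and choices such as ray tracing versus rasterization have to be made in the renderer itself and cannot be imitated this way.

```python
import random

def randomize_rendering(image, rng, gain_range=(0.5, 1.5), max_noise_std=10.0):
    """Apply a random global brightness gain and Gaussian pixel noise.

    `image` is a list of rows of integers in [0, 255]; the result has
    the same shape and is clipped back to that range.
    """
    gain = rng.uniform(*gain_range)
    noise_std = rng.uniform(0.0, max_noise_std)
    out = []
    for row in image:
        new_row = []
        for pixel in row:
            value = gain * pixel + rng.gauss(0.0, noise_std)
            new_row.append(min(255, max(0, round(value))))
        out.append(new_row)
    return out

rng = random.Random(0)
image = [[0, 128, 255],
         [10, 20, 30]]
print(randomize_rendering(image, rng))
```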

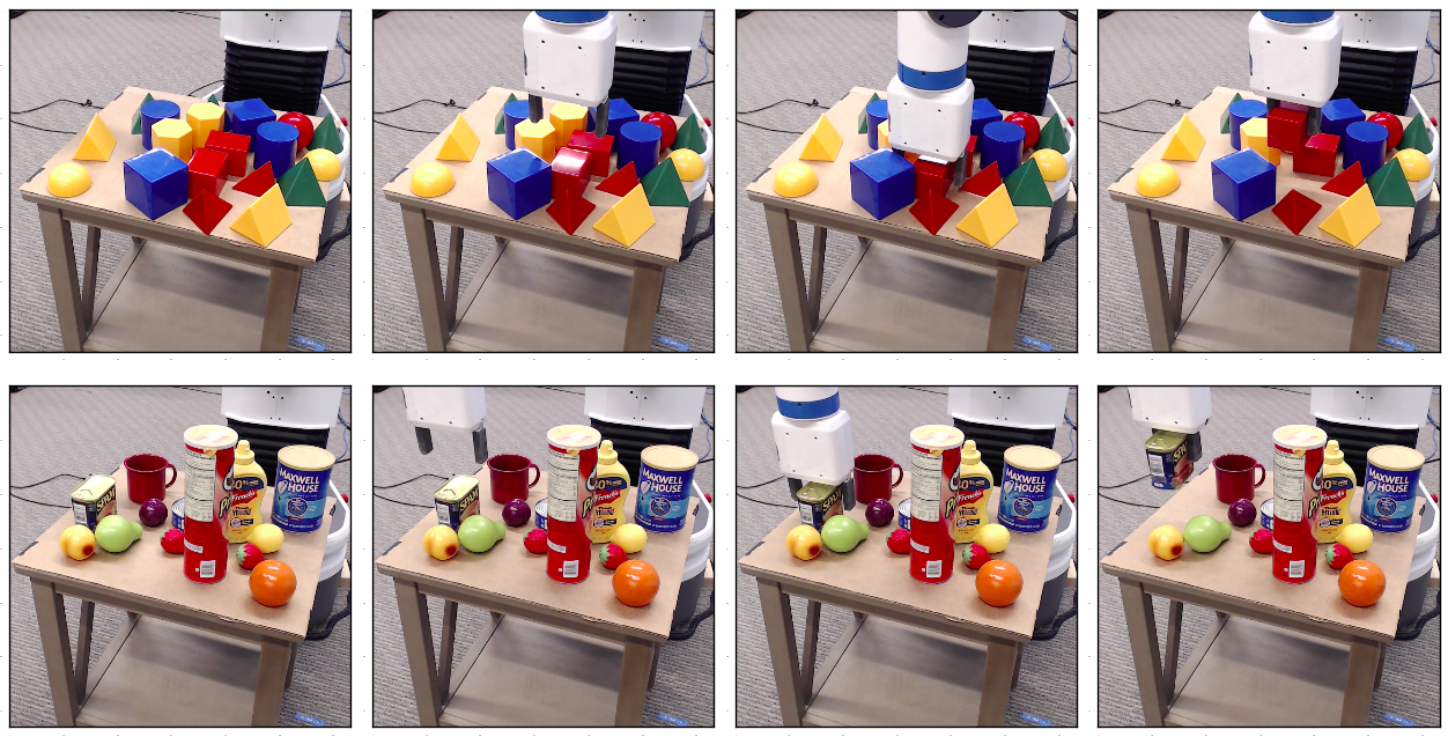

Training an object detector for grasping

- Applied domain randomization with distractors (different objects at test time)

- Detector trained with no prior information about the color of the target object

- The model reached high enough accuracy in the real world to perform grasping in clutter

- Used a modified version of VGG16

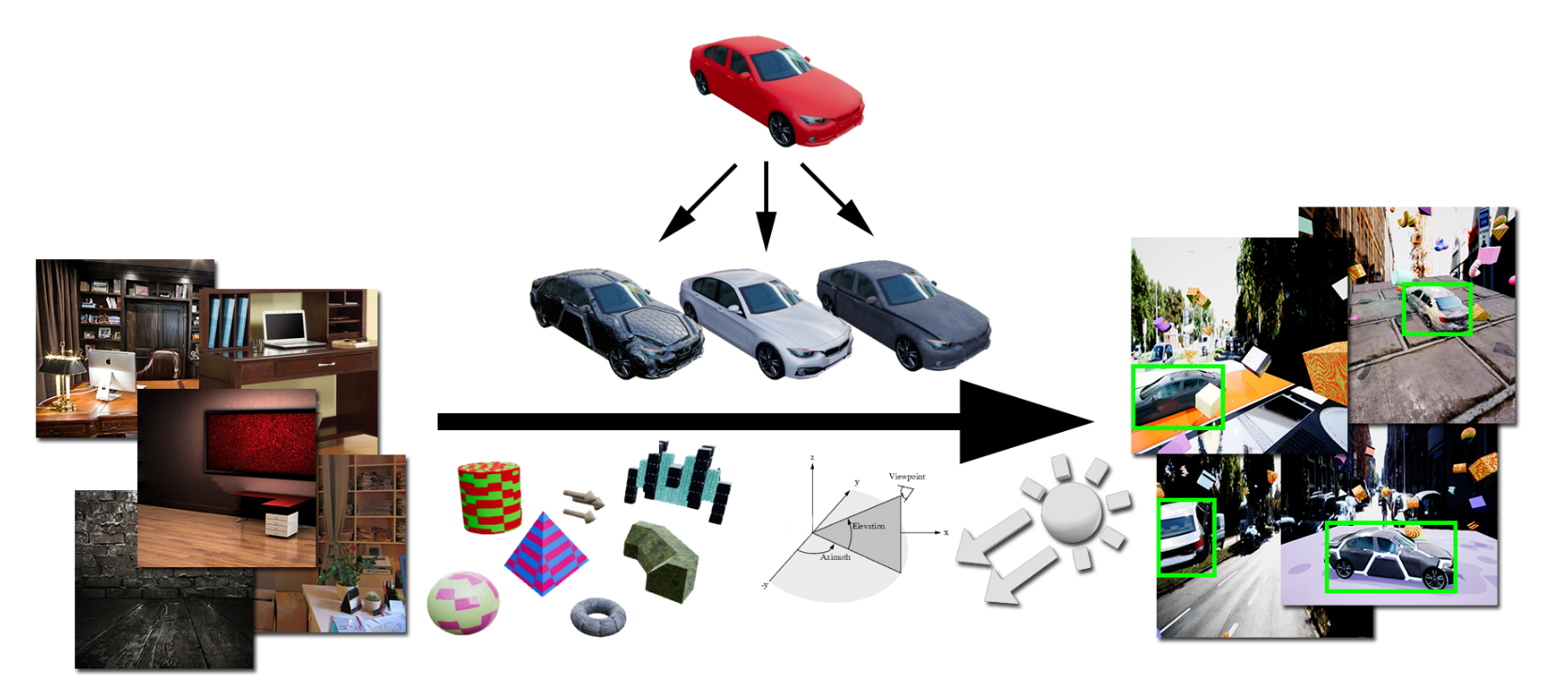

Training deep networks with synthetic data: Bridging the reality gap by domain randomization

Tremblay et al, 2018

- Number and types of objects

  - 36 downloaded 3D models of generic sedan and hatchback cars

- Number, types, colors, and scales of distractors, selected from a set of 3D models

- Texture and background photograph, both taken from the Flickr 8K dataset

- Location of the virtual camera with respect to the scene

- Angle of the camera with respect to the scene

- Number and locations of lights (from 1 to 12)

- Visibility of the ground plane.

Fine Tuning

Tremblay et al, 2018

- Object detection on cars + Domain randomization

- Dataset cheaper to produce

- Improved performance with fine-tuning on real images

- Competitive results training only on synthetic dataset

- With additional fine-tuning on real data, the network yields better performance

Domain Randomization Strategy

An Annotation Saved is an Annotation Earned: Using Fully Synthetic Training for Object Instance Detection

- Retail object detection

- Scene completely synthetic

- Two layers of objects: background and foreground

- Control over the statistics of the dataset

Dataset creation

How much effort?

Synthetic

- About 5 hours to scan all 64 foreground objects

Real

- 10 hours to acquire the real images

- 185 hours to label the training set + 6 hours for correction
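The figures above can be put side by side with a short calculation. Note the assumption in the comparison: the synthetic figure covers only the scanning of the objects, as stated on the slide, and not rendering time or pipeline engineering.

```python
synthetic_hours = 5            # scanning all 64 foreground objects
real_hours = 10 + 185 + 6      # acquisition + labeling + correction

print(real_hours)                    # 201
print(real_hours / synthetic_hours)  # 40.2
```

Under these numbers, the real dataset took roughly 40 times more human effort than the scanning step of the synthetic one.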

What about the cost of enlarging the dataset?

A strategy for generating synthetic data

- Controlled size of the background

- Distribution of background colors

- Foreground models are presented to the network equally under all possible poses and conditions with increasing complexity

- Occlusion layer
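The idea of presenting foreground models equally under all poses, with increasing complexity, can be sketched as a deterministic enumeration instead of random sampling. The function below is a hypothetical illustration: it assumes that larger on-screen scale means an easier example, and the object ids, scales, and rotation steps are placeholders rather than the settings used by Hinterstoisser et al.

```python
from itertools import product

def curriculum_poses(object_ids, scales, rotations_deg):
    """Yield (object, scale, rotation) triples.

    Every object is paired with every rotation at every scale, and
    scales are visited from largest (assumed easiest) to smallest.
    """
    for scale in sorted(scales, reverse=True):
        for rotation, obj in product(rotations_deg, object_ids):
            yield obj, scale, rotation

poses = list(curriculum_poses(["obj_a", "obj_b"],
                              scales=[0.5, 1.0],
                              rotations_deg=[0, 90, 180, 270]))
print(len(poses))
print(poses[0], poses[-1])
```

Compared with random pose generation, this guarantees that no object or pose is under-represented in the training set.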

Hinterstoisser et al, 2019

- Model trained only on synthetic data outperformed models trained on real data

- It works in challenging situations

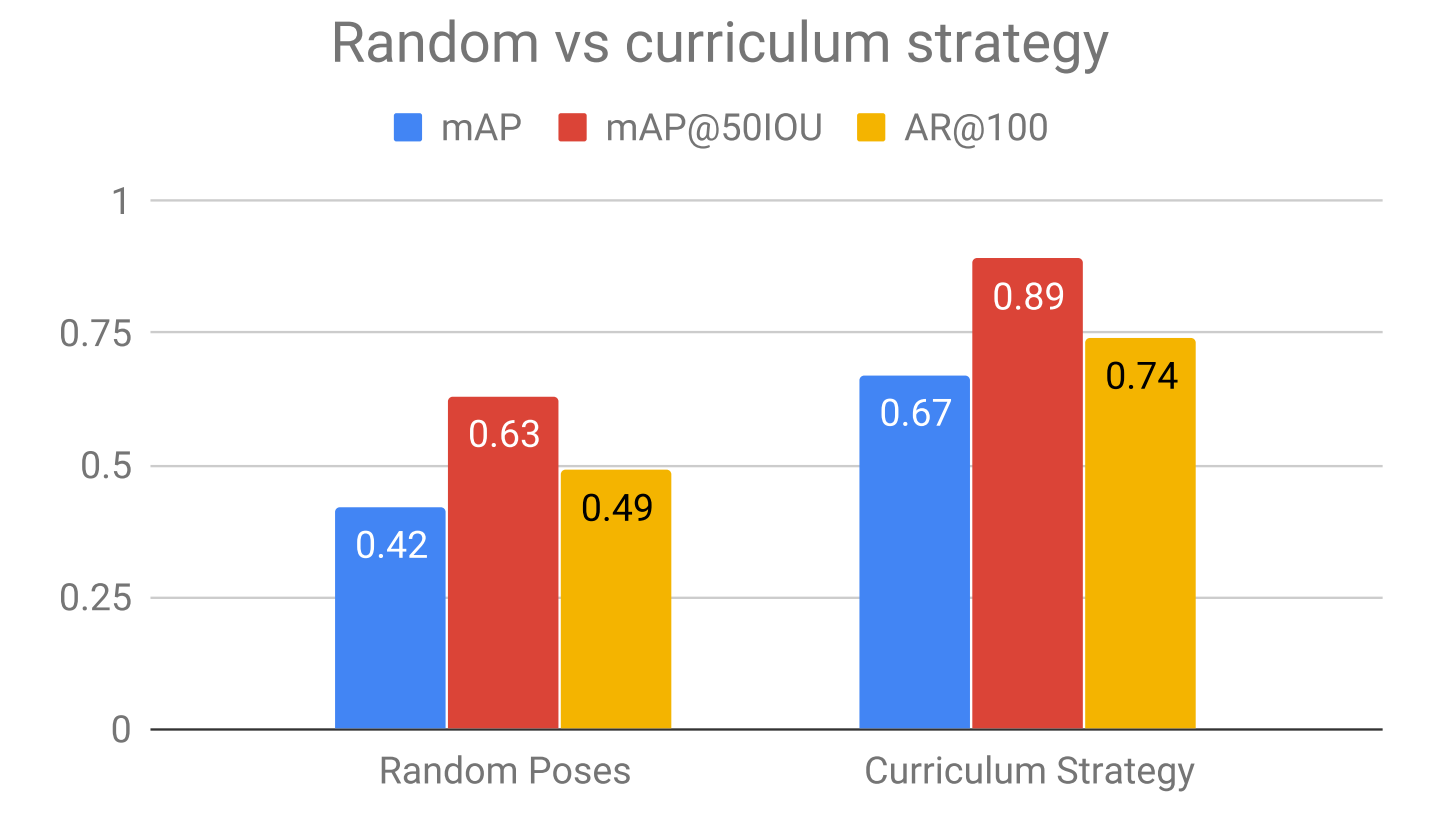

Curriculum vs Random pose generation

"Furthermore, we show through ablation experiments the benefits of curriculum vs random pose generation, the effects of relative scale of background objects with respect to foreground objects, the effects of the amount of foreground objects rendered per image, the benefits of using synthetic background objects, and finally the effects of random colors and blur."

Cut and Paste (image to image)

- Cut and paste from easy to challenging scenarios

- Semantic conditioning with GAN-based architecture
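The basic cut-and-paste step can be sketched as follows: a masked object patch is pasted onto a background, and the bounding-box annotation falls out of the paste position automatically. This is a minimal illustration on list-of-rows images with hypothetical names; Dwibedi et al. additionally study blending at the patch boundary, which this sketch omits.

```python
def paste(background, patch, mask, top, left):
    """Paste `patch` onto a copy of `background` wherever `mask` is 1.

    Returns the composite image and the bounding box
    (x_min, y_min, x_max, y_max) of the pasted pixels, or None if
    nothing landed inside the image.
    """
    image = [row[:] for row in background]
    xs, ys = [], []
    for dy, (patch_row, mask_row) in enumerate(zip(patch, mask)):
        for dx, (value, keep) in enumerate(zip(patch_row, mask_row)):
            y, x = top + dy, left + dx
            if keep and 0 <= y < len(image) and 0 <= x < len(image[0]):
                image[y][x] = value
                xs.append(x)
                ys.append(y)
    box = (min(xs), min(ys), max(xs), max(ys)) if xs else None
    return image, box

background = [[0] * 6 for _ in range(5)]
patch = [[9, 9],
         [9, 9]]
mask = [[1, 1],
        [0, 1]]
image, box = paste(background, patch, mask, top=1, left=2)
print(box)  # (2, 1, 3, 2)
```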

Domain Adaptation

Domain adaptation is a set of techniques designed to make a model trained on one domain of data, the source domain, work well on a different, target domain.

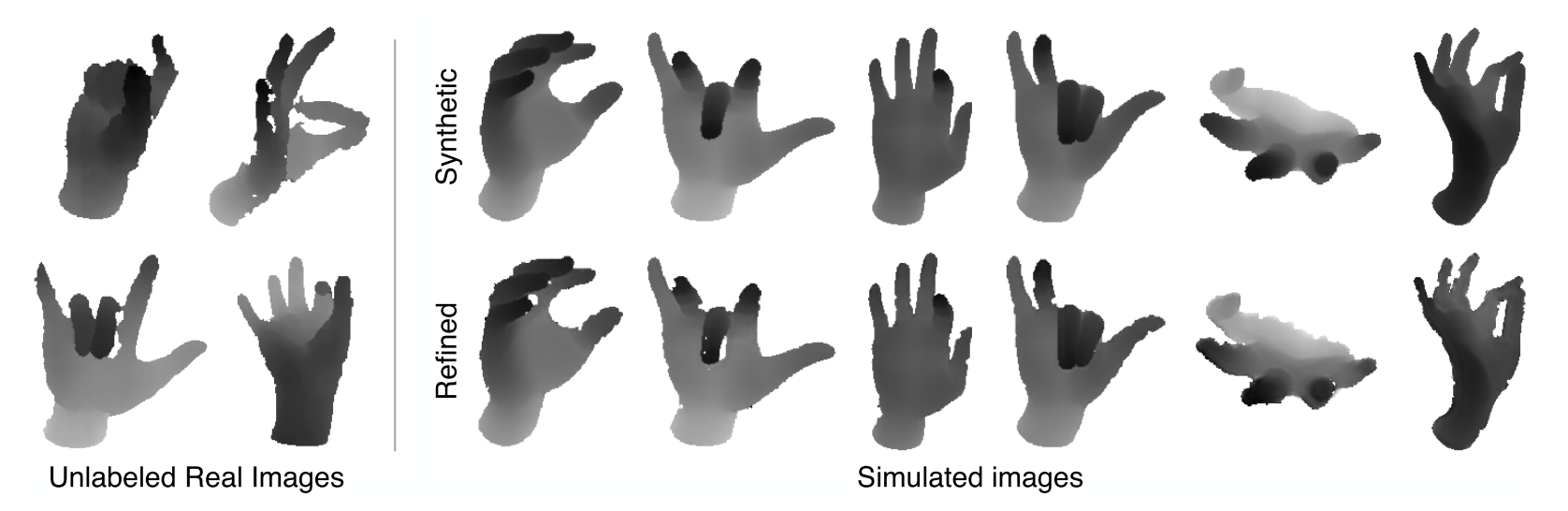

Synthetic to real refinement

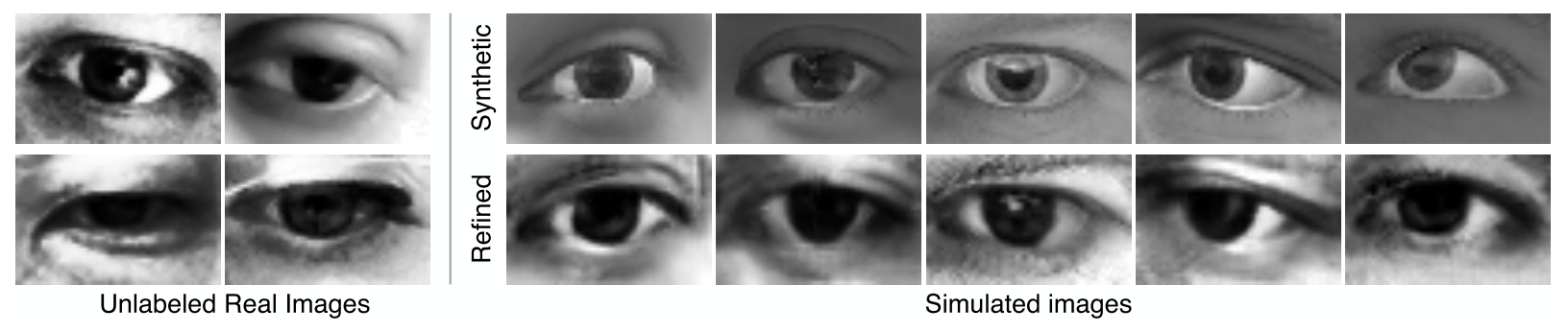

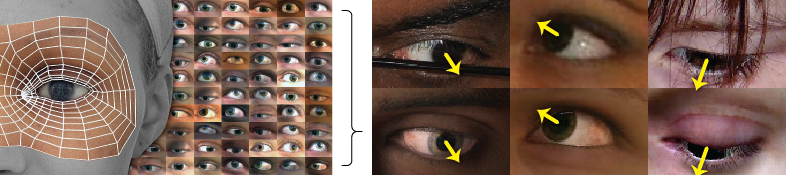

SimGAN: Simulated + Unsupervised Learning

- Learn a model to improve the realism of a simulator's output

- Simulated + Unsupervised Learning (no labels!)

- GANs conditioned on synthetic images

- Must preserve annotations

UnityEyes dataset

Annotating the eye images with a gaze direction vector is challenging

SimGAN

Training losses
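For reference, the two SimGAN losses can be written as below. This is a reconstruction from Shrivastava et al. (2017) and should be checked against the paper. Here $x_i$ is a synthetic image, $y_j$ an unlabeled real image, $R_\theta$ the refiner, $\tilde{x}_i = R_\theta(x_i)$ the refined image, $D_\phi(\cdot)$ the discriminator's estimated probability that its input is a refined (synthetic) image, $\psi$ a feature mapping (which can be the identity), and $\lambda$ a weighting factor.

```latex
% Discriminator: tell refined images apart from real ones
\mathcal{L}_D(\phi) = -\sum_i \log D_\phi(\tilde{x}_i)
                      - \sum_j \log\bigl(1 - D_\phi(y_j)\bigr)

% Refiner: fool the discriminator while staying close to the synthetic input
\mathcal{L}_R(\theta) = \sum_i \Bigl[ -\log\bigl(1 - D_\phi(R_\theta(x_i))\bigr)
                      + \lambda \,\bigl\lVert \psi(R_\theta(x_i)) - \psi(x_i) \bigr\rVert_1 \Bigr]
```

The second term of the refiner loss is the self-regularization that keeps the refined image close to its synthetic source, which is what allows the original annotations to be preserved.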

SimGAN Results

– MPIIGaze dataset of real eyes

– Metric is the mean eye gaze estimation error in degrees
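A mean angular error of this kind can be computed as the average angle between predicted and ground-truth gaze vectors. The sketch below assumes 3D gaze direction vectors given as tuples; the exact evaluation protocol used on MPIIGaze is not described in these slides, so treat this as an illustration of the metric only.

```python
import math

def angular_error_deg(predicted, target):
    """Angle in degrees between two direction vectors."""
    dot = sum(p * t for p, t in zip(predicted, target))
    norm = (math.sqrt(sum(p * p for p in predicted))
            * math.sqrt(sum(t * t for t in target)))
    cosine = max(-1.0, min(1.0, dot / norm))  # guard against rounding
    return math.degrees(math.acos(cosine))

def mean_angular_error_deg(predictions, targets):
    """Average angular error over a set of gaze predictions."""
    errors = [angular_error_deg(p, t) for p, t in zip(predictions, targets)]
    return sum(errors) / len(errors)

predictions = [(1.0, 0.0, 0.0), (0.0, 0.0, 2.0)]
targets = [(0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
print(mean_angular_error_deg(predictions, targets))
```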

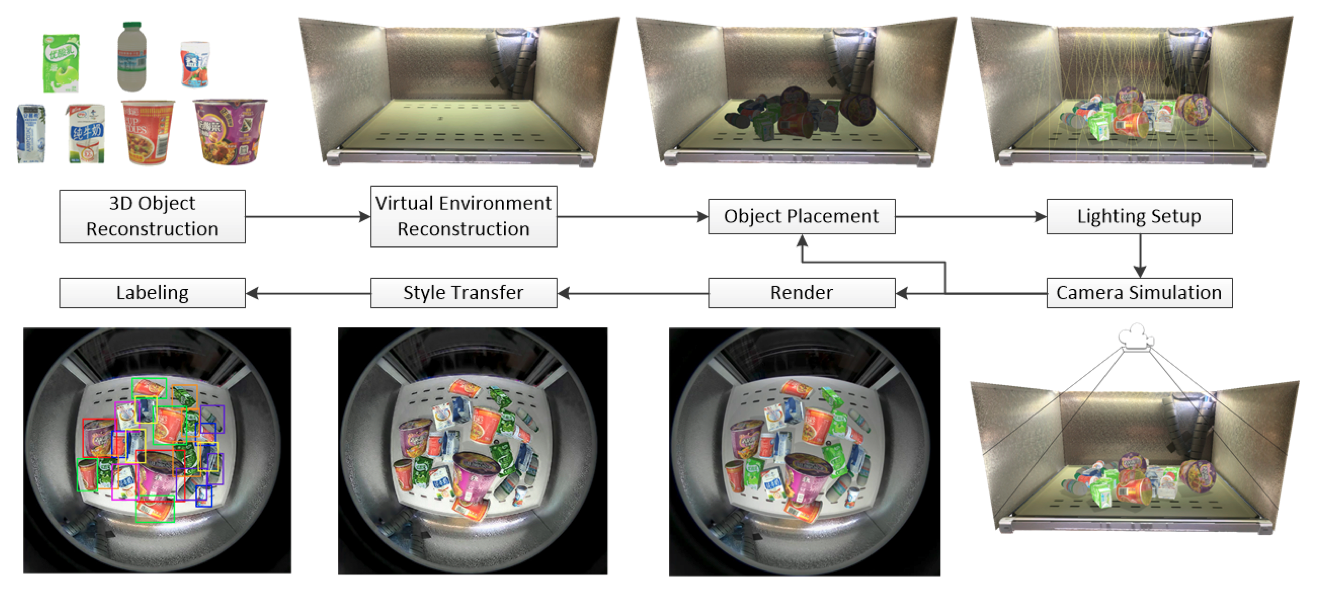

Synthetic data generation and adaption for object detection in smart vending machines

Wang et al 2019

- Object recognition inside automatic vending machines

- Scanned objects + random deformations to the resulting 3D models

- Rendering with settings matching the fisheye cameras

- Refine rendered images with virtual-to-real style transfer done by a CycleGAN-based architecture.

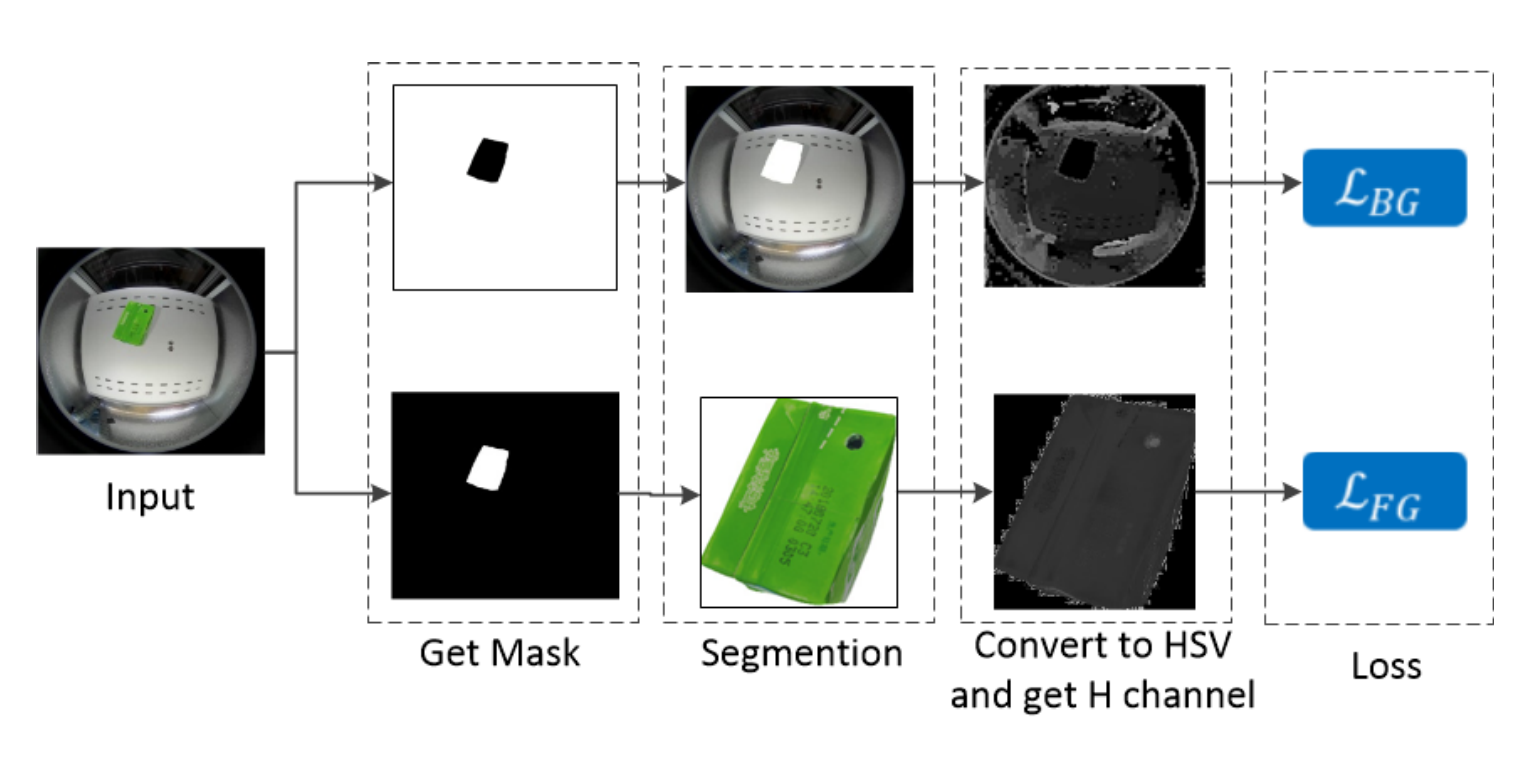

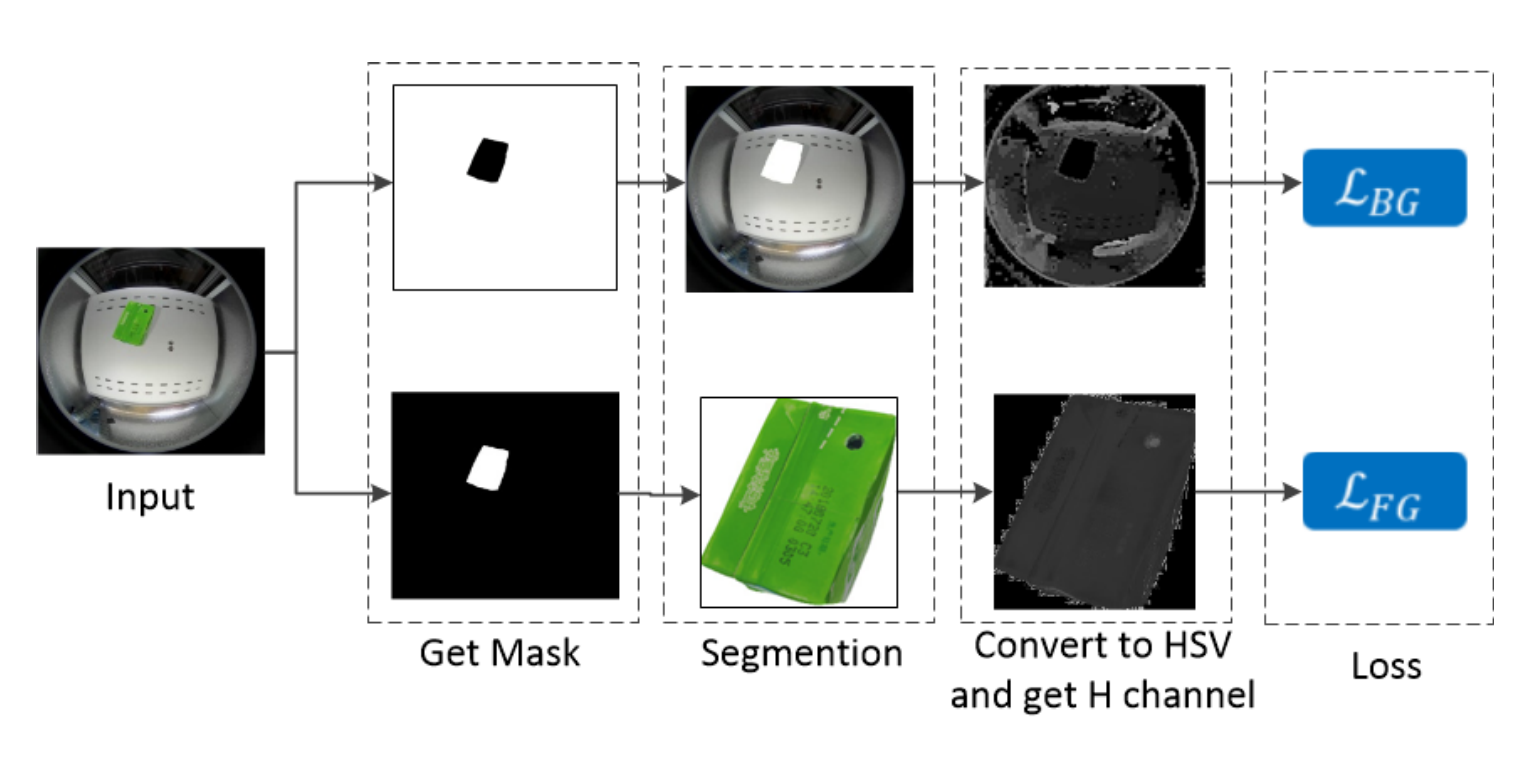

Wang et al 2019

- Novelty: separate foreground and background losses

- The style transfer needed for foreground objects is stronger than the one needed for backgrounds

Wang et al 2019

- Segmentation in synthetic data: automatic

- For real data, it takes a bit more work...

Wang et al 2019

- Results improved when using hybrid datasets for all three tested object detection architectures: PVANET, SSD, and YOLOv3

- Comparison between basic and refined synthetic data shows gains achieved by refinement (PVANET)

Other approaches

Other interesting approaches

- Randomize only within realistic ranges

  - Quan Van Vuong, Sharad Vikram, Hao Su, Sicun Gao, and Henrik I. Christensen. How to pick the domain randomization parameters for sim-to-real transfer of reinforcement learning policies? ArXiv, abs/1903.11774, 2019

- Domain adaptation at the feature/model level

  - Artem Rozantsev, Vincent Lepetit, and Pascal Fua. On rendering synthetic images for training an object detector. Computer Vision and Image Understanding, 137:24–37, 2015
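To give a flavor of adaptation at the feature level, the sketch below shifts and scales each dimension of the source (synthetic) features so that its mean and standard deviation match those of the target (real) features. This is a deliberately simple stand-in chosen for illustration, not the method of Rozantsev et al.; it assumes unlabeled target features are available, and the function name is hypothetical.

```python
import statistics

def align_features(source, target):
    """Match per-dimension mean and standard deviation of source to target.

    `source` and `target` are lists of equal-width feature vectors.
    """
    aligned_columns = []
    for src_col, tgt_col in zip(zip(*source), zip(*target)):
        src_mean, src_std = statistics.fmean(src_col), statistics.pstdev(src_col)
        tgt_mean, tgt_std = statistics.fmean(tgt_col), statistics.pstdev(tgt_col)
        scale = tgt_std / src_std if src_std > 0 else 1.0
        aligned_columns.append([(v - src_mean) * scale + tgt_mean
                                for v in src_col])
    return [list(row) for row in zip(*aligned_columns)]

source = [[0.0, 10.0], [2.0, 14.0], [4.0, 18.0]]   # e.g. synthetic features
target = [[5.0, 1.0], [6.0, 2.0], [7.0, 3.0]]      # e.g. real features
aligned = align_features(source, target)
print(aligned)
```

A classifier trained on the aligned source features then sees inputs with the same first- and second-order statistics as the target domain.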

Conclusion

- Building large-scale datasets is hard!

- Despite the reality gap, we don't necessarily need photorealism

- Real data might help to improve performance

- How much effort to make it work well on real scenarios?

- Synthetic data will be even more important

- There are competitive results already

Wait...!!!

How can I do it?

Next week...

Generation of synthetic data for machine learning

1. Why does synthetic data matter?

2. How to generate synthetic data and train a model with it

3. Do they live in a simulation? Training models for dynamic environments

THANK YOU!

References:

- S. Nikolenko. Synthetic data for deep learning. arXiv, 2019.

- M. Johnson-Roberson, C. Barto, R. Mehta, S. N. Sridhar, K. Rosaen, and R. Vasudevan. Driving in the matrix: Can virtual worlds replace human-generated annotations for real world tasks? In ICRA, 2017.

- J. Tobin, R. Fong, A. Ray, J. Schneider, W. Zaremba, and P. Abbeel. Domain randomization for transferring deep neural networks from simulation to the real world. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 23–30, 2017.

- J. Tremblay, A. Prakash, D. Acuna, M. Brophy, V. Jampani, C. Anil, T. To, E. Cameracci, S. Boochoon, and S. Birchfield. Training deep networks with synthetic data: Bridging the reality gap by domain randomization. In Workshop on Autonomous Driving, CVPR-Workshops, 2018.

- S. Hinterstoisser, O. Pauly, H. Heibel, M. Marek, and M. Bokeloh. An annotation saved is an annotation earned: Using fully synthetic training for object instance detection. In IEEE/CVF International Conference on Computer Vision Workshops, 2019.

- D. Dwibedi, I. Misra, and M. Hebert. Cut, paste and learn: Surprisingly easy synthesis for instance detection. In ICCV, 2017.

- S. R. Richter, H. Abu AlHaija, and V. Koltun. Enhancing photorealism enhancement. arXiv:2105.04619, 2021.

- A. Shrivastava, T. Pfister, O. Tuzel, J. Susskind, W. Wang, and R. Webb. Learning from simulated and unsupervised images through adversarial training. In CVPR, 2017.

- E. Wood, T. Baltrušaitis, L. Morency, P. Robinson, and A. Bulling. Learning an appearance-based gaze estimator from one million synthesised images. In Proc. ACM Symposium on Eye Tracking Research & Applications, 2016.

- K. Wang, F. Shi, W. Wang, Y. Nan, and S. Lian. Synthetic data generation and adaption for object detection in smart vending machines. CoRR, abs/1904.12294, 2019.